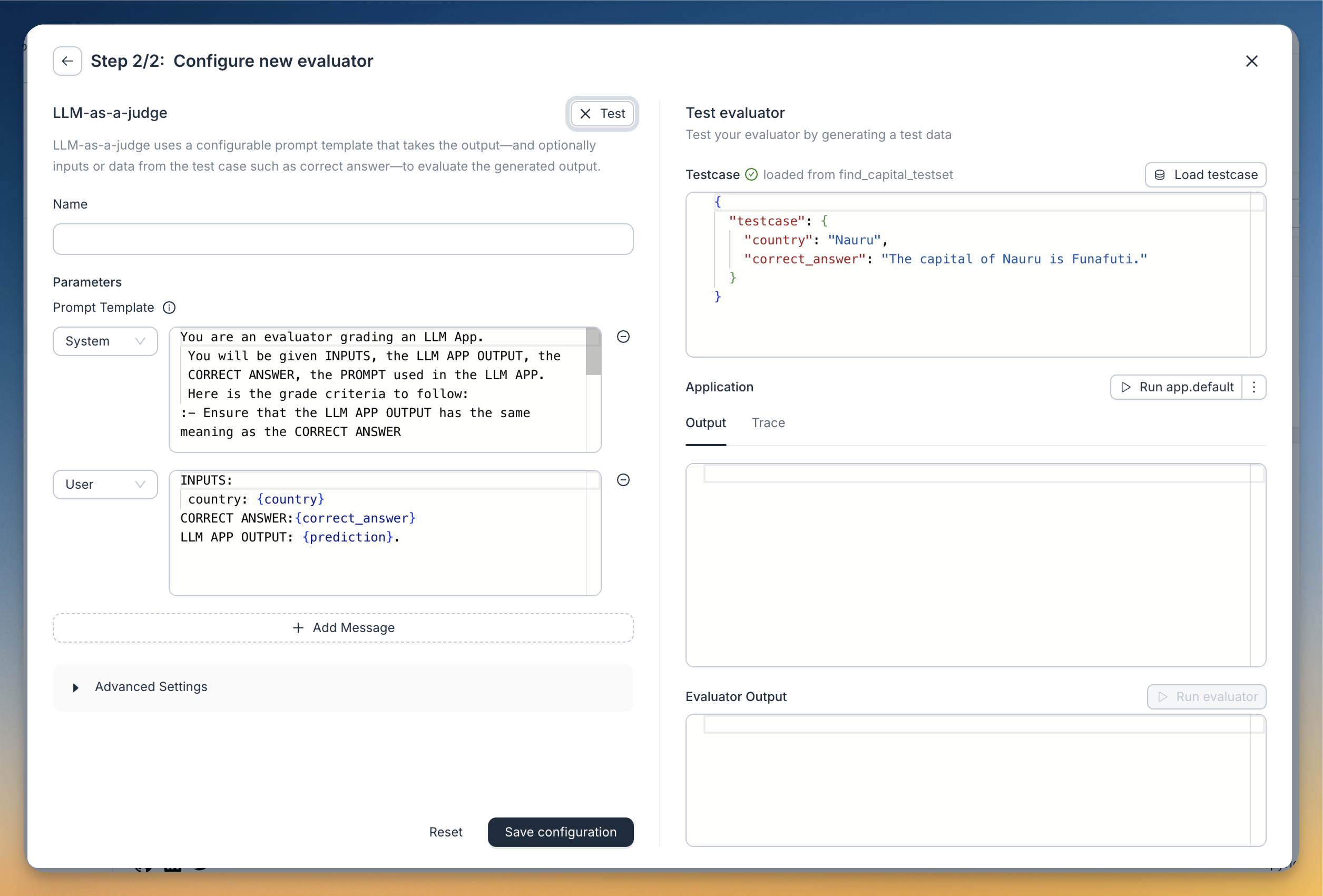

LLM-as-a-Judge

LLM-as-a-Judge is an evaluator that uses an LLM to assess LLM outputs. It's particularly useful for evaluating text generation tasks or chatbots where there's no single correct answer.

The evaluator has the following parameters:

The Prompt

You can configure the prompt used for evaluation. The prompt can contain multiple messages in OpenAI format (role/content). Every message can reference the application's output and any column of the test set, such as the inputs and the reference answer. To reference these values, use the following variables, wrapped in double curly braces:

- {{correct_answer}}: the test-set column holding the reference answer (optional). You can configure the name of this column under Advanced Setting in the configuration modal.
- {{prediction}}: the output of the LLM application.
- {{$input_column_name}}: the value of any input column for the given row of your test set (e.g. {{country}}).

If no correct_answer column is present in your test set, the variable will be left blank in the prompt.
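A minimal Python sketch of the substitution rules above, for illustration only. The helper name `render_prompt`, the regex, and the choice to leave unrecognized variables untouched are assumptions, not the evaluator's actual implementation. It fills `{{prediction}}` with the application output, `{{correct_answer}}` with the configured reference column (blank when that column is missing), and any other `{{name}}` with the matching test-set column.

```python
import re
from typing import Dict, List

# Matches {{name}}, allowing optional whitespace inside the braces.
_VARIABLE = re.compile(r"\{\{\s*([^{}\s]+)\s*\}\}")


def render_prompt(
    messages: List[Dict[str, str]],
    row: Dict[str, str],
    prediction: str,
    correct_answer_column: str = "correct_answer",
) -> List[Dict[str, str]]:
    """Fill {{...}} variables in role/content messages (hypothetical helper)."""
    # Every test-set column is available by name, e.g. {{country}}.
    values = dict(row)
    # The application output is exposed as {{prediction}}.
    values["prediction"] = prediction
    # {{correct_answer}} reads the configured reference column, blank if absent.
    values["correct_answer"] = row.get(correct_answer_column, "")

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        # Assumption: unknown variables are kept verbatim instead of raising.
        return str(values[name]) if name in values else match.group(0)

    return [
        {"role": m["role"], "content": _VARIABLE.sub(substitute, m["content"])}
        for m in messages
    ]


# Example: the default user prompt rendered for one test-set row.
messages = [
    {
        "role": "user",
        "content": "<correct_answer>{{correct_answer}}</correct_answer>\n"
        "<llm_app_output>{{prediction}}</llm_app_output>",
    },
]
row = {"country": "France", "correct_answer": "Paris"}
print(render_prompt(messages, row, prediction="Paris is the capital.")[0]["content"])
```

Returning new message dicts rather than mutating the input keeps one prompt template reusable across every row of the test set.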

Here's the default prompt:

System prompt:

You are an evaluator grading an LLM App.

You will be given INPUTS, the LLM APP OUTPUT, the CORRECT ANSWER used in the LLM APP.

<grade_criteria>

- Ensure that the LLM APP OUTPUT has the same meaning as the CORRECT ANSWER

</grade_criteria>

<score_criteria>

- The score should be between 0 and 1

- A score of 1 means that the answer is perfect. This is the highest (best) score.

- A score of 0 means that the answer does not meet any of the criteria. This is the lowest possible score you can give.

</score_criteria>

<output_format>

ANSWER ONLY THE SCORE. DO NOT USE MARKDOWN. DO NOT PROVIDE ANYTHING OTHER THAN THE NUMBER

</output_format>

User prompt:

<correct_answer>{{correct_answer}}</correct_answer>

<llm_app_output>{{prediction}}</llm_app_output>
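The default prompt asks the judge for nothing but a number between 0 and 1, so the caller still has to turn the raw reply into a score. The sketch below shows one way to do that in Python; the name `parse_score` and the decision to raise `ValueError` on malformed or out-of-range replies (instead of clamping or defaulting to 0) are assumptions, not documented behavior of this evaluator.

```python
def parse_score(reply: str) -> float:
    """Convert the judge's raw reply into a score in [0, 1] (hypothetical helper)."""
    text = reply.strip()
    try:
        score = float(text)
    except ValueError:
        # The output format allows only the number, so any extra text
        # (labels, markdown, explanations) counts as a malformed reply.
        raise ValueError(f"judge reply is not a bare number: {reply!r}") from None
    # This range check also rejects "nan" and "inf", which float() accepts.
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score outside [0, 1]: {score}")
    return score


print(parse_score(" 0.75\n"))
```

Raising rather than clamping makes a misbehaving judge visible: a reply such as `Score: 0.8` or `1.5` fails loudly instead of silently becoming a plausible-looking score.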

The Model

You can set the judge model to one of the supported options: gpt-3.5-turbo, gpt-4o, gpt-5, gpt-5-mini, gpt-5-nano, claude-3-5-sonnet, claude-3-5-haiku, or claude-3-5-opus. To use LLM-as-a-Judge, you need to set your OpenAI or Anthropic API key in the settings. The key is saved locally and sent to our servers only to run the evaluation; it is not stored there.
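If you script evaluations outside the UI, you need the key that matches the chosen model's provider: `gpt-*` models use OpenAI and `claude-*` models use Anthropic. The sketch below is hypothetical: the table and function names are illustrative, and the environment variable names `OPENAI_API_KEY` and `ANTHROPIC_API_KEY` are the defaults read by the official OpenAI and Anthropic Python SDKs, not settings of this product.

```python
import os
from typing import Dict, Mapping

# Hypothetical lookup table built from the supported model list.
MODEL_PROVIDER: Dict[str, str] = {
    "gpt-3.5-turbo": "openai",
    "gpt-4o": "openai",
    "gpt-5": "openai",
    "gpt-5-mini": "openai",
    "gpt-5-nano": "openai",
    "claude-3-5-sonnet": "anthropic",
    "claude-3-5-haiku": "anthropic",
    "claude-3-5-opus": "anthropic",
}

# Environment variables the official OpenAI and Anthropic Python SDKs read by default.
PROVIDER_KEY_ENV: Dict[str, str] = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
}


def required_key_env(model: str) -> str:
    """Return the name of the API-key variable needed for a judge model."""
    try:
        provider = MODEL_PROVIDER[model]
    except KeyError:
        raise ValueError(f"unsupported judge model: {model!r}") from None
    return PROVIDER_KEY_ENV[provider]


def has_api_key(model: str, env: Mapping[str, str] = os.environ) -> bool:
    """True if the matching provider key is present and non-empty in env."""
    return bool(env.get(required_key_env(model)))
```

Keying the table by exact model name, rather than by prefix, means an unsupported model fails fast with a clear error instead of being routed to the wrong provider.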