Integrating with agenta

Overview

Applications and prompts created in agenta can be integrated into your projects in two primary ways:

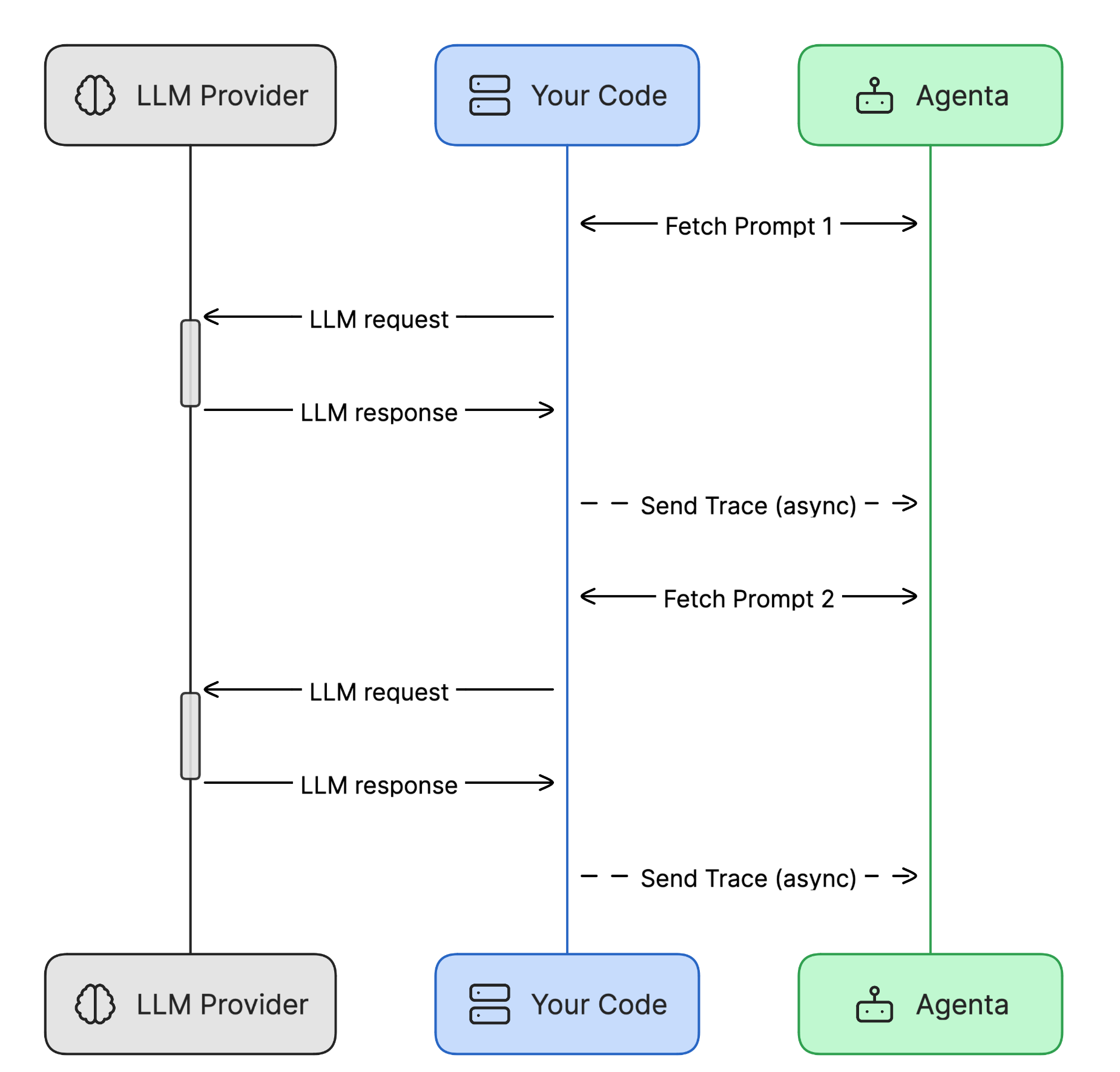

- As a Prompt Management System: Fetch the latest version of prompts/configurations from agenta.

- As a Middleware: Use the applications hosted on agenta directly.

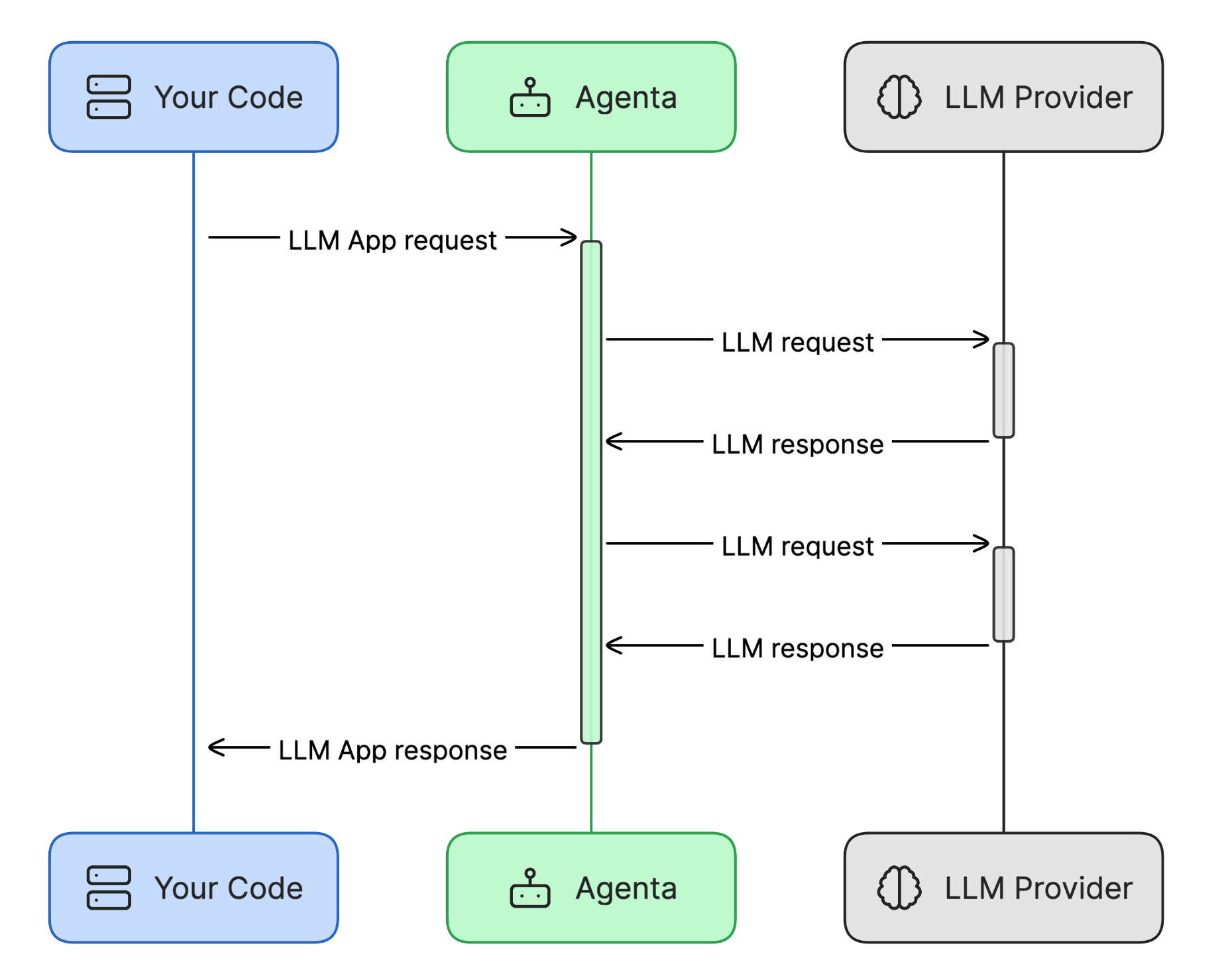

Using agenta as Middleware

When using agenta as middleware, you directly interact with applications hosted on agenta. This method is straightforward and includes built-in observability features.

Steps to Integrate:

- Navigate to the deployment view in agenta.

- Locate the API endpoints under the "Endpoints" menu.

- Use these endpoints in your application to interact with the hosted LLM apps.

The applications are fully instrumented, and all traces can be viewed in the observability dashboard.
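As a rough illustration of the last step, the sketch below builds a JSON POST request with Python's standard library. The endpoint URL, the flat JSON payload shape, and the Authorization header are assumptions made for illustration; the actual URL and request format are the ones shown under the "Endpoints" menu for your deployment.

```python
import json
import urllib.request

# Hypothetical placeholder: copy the real URL from the "Endpoints" menu.
ENDPOINT_URL = "https://example.com/my-app/generate"


def build_request(url, inputs, api_key=None):
    """Build (but do not send) a JSON POST request for a hosted app."""
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Assumption: the key is passed in an Authorization header.
        headers["Authorization"] = api_key
    # Assumption: the app accepts its inputs as a flat JSON object.
    body = json.dumps(inputs).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def call_app(url, inputs, api_key=None, timeout=30):
    """Send the request and decode the JSON response."""
    request = build_request(url, inputs, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Building the request needs no network access; call_app() would send it.
request = build_request(ENDPOINT_URL, {"country": "France"})
```

Separating request construction from sending keeps the payload easy to inspect before any call reaches the hosted app.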

Using agenta as a Prompt Management System

You can use agenta to manage and fetch prompts or configurations.

Steps:

- Install the agenta Python SDK (pip install agenta).

- Set up environment variables:

  - AGENTA_API_KEY for cloud users.

  - AGENTA_HOST set to http://localhost if you are self-hosting.
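Before creating the client, it can help to confirm that the environment is set up as described. The helper below is a minimal sketch, not part of the agenta SDK: the function name describe_agenta_env is hypothetical, and giving AGENTA_HOST precedence when both variables are set is an assumption.

```python
import os


def describe_agenta_env(env=None):
    """Report which setup the environment variables describe.

    Hypothetical helper for illustration; not part of the agenta SDK.
    """
    env = os.environ if env is None else env
    host = env.get("AGENTA_HOST")
    api_key = env.get("AGENTA_API_KEY")
    if host:
        # Assumption: an explicit host means a self-hosted instance.
        return {"mode": "self-hosted", "host": host}
    if api_key:
        return {"mode": "cloud", "host": None}
    raise RuntimeError(
        "Set AGENTA_API_KEY (cloud) or AGENTA_HOST (self-hosted)."
    )


# Example with explicit dictionaries instead of the real environment.
self_hosted = describe_agenta_env({"AGENTA_HOST": "http://localhost"})
cloud = describe_agenta_env({"AGENTA_API_KEY": "placeholder-key"})
```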

Example Code:

from agenta import Agenta

agenta = Agenta()

config = agenta.get_config(base_id="xxxxx", environment="production", cache_timeout=200) # Fetches the configuration with caching

The response object is an instance of GetConfigResponse from agenta.client.backend.types.get_config_response. It contains the following attributes:

- config_name: 'default'

- current_version: 1

- parameters: This dictionary contains the configuration of the application, for instance:

{'temperature': 1.0,

'model': 'gpt-3.5-turbo',

'max_tokens': -1,

'prompt_system': 'You are an expert in geography.',

'prompt_user': 'What is the capital of {country}?',

'top_p': 1.0,

'frequence_penalty': 0.0,

'presence_penalty': 0.0,

'force_json': 0}
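Once fetched, the parameters dictionary can be turned into chat messages by filling in the prompt template. The sketch below assumes the templates use Python str.format placeholders, as {country} in the example suggests; build_messages is a hypothetical helper, and the dictionary is a trimmed copy of the example above rather than a live response.

```python
# Trimmed copy of the example parameters shown above.
parameters = {
    "temperature": 1.0,
    "model": "gpt-3.5-turbo",
    "prompt_system": "You are an expert in geography.",
    "prompt_user": "What is the capital of {country}?",
    "top_p": 1.0,
}


def build_messages(parameters, **variables):
    """Fill the user prompt template and return chat-style messages.

    Hypothetical helper; assumes str.format-style placeholders.
    """
    return [
        {"role": "system", "content": parameters["prompt_system"]},
        {"role": "user", "content": parameters["prompt_user"].format(**variables)},
    ]


messages = build_messages(parameters, country="France")
```

The remaining keys, such as model, temperature, and top_p, can then be passed to whichever LLM client your project already uses.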