Use Mistral-7B from Hugging Face

This tutorial guides you through deploying an LLM application in Agenta using Mistral-7B from Hugging Face.


Set up a Hugging Face Account

Sign up for an account at Hugging Face.

Access Mistral-7B Model

Go to the Mistral-7B-v0.1 model page.

Deploy the Model

Choose the 'Deploy' option and select 'Inference API'.

Generate an Access Token

If you haven't already, create an Access Token on Hugging Face.

Initial Python Script

Start with a basic script to interact with Mistral-7B:

    import requests

    API_URL = "https://api-inference.huggingface.co/models/mistralai/Mistral-7B-v0.1"
    # Replace [Your_Token] with your Hugging Face access token.
    headers = {"Authorization": "Bearer [Your_Token]"}

    def query(payload):
        """Send the payload to the Inference API and return the decoded JSON."""
        response = requests.post(API_URL, headers=headers, json=payload)
        return response.json()

    output = query({"inputs": "Your query here"})
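The script above keeps the token inline as a placeholder. One way to avoid committing a real token is to read it from the environment. The following is a minimal sketch, not part of the original tutorial: the helper names `load_token` and `build_headers` and the variable name `HF_TOKEN` are illustrative assumptions.

```python
import os

# Assumption: the variable name HF_TOKEN is an illustrative choice,
# not something the tutorial prescribes.
TOKEN_ENV_VAR = "HF_TOKEN"


def load_token(env_var: str = TOKEN_ENV_VAR) -> str:
    """Read the access token from the environment, failing early if it is unset."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your access token"
        )
    return token


def build_headers(token: str) -> dict:
    """Build the Authorization header in the same shape as the script's headers dict."""
    return {"Authorization": f"Bearer {token}"}
```

With these helpers, the `headers` line in the script could become `headers = build_headers(load_token())`, so the token never appears in the source file.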

Integrate Agenta SDK

Modify the script to include the Agenta SDK:

    import agenta as ag
    import requests

    API_URL = "https://api-inference.huggingface.co/models/mistralai/Mistral-7B-v0.1"
    # Replace [Your_Token] with your Hugging Face access token.
    headers = {"Authorization": "Bearer [Your_Token]"}

    ag.init()
    # Register the prompt template as a configurable parameter of the app.
    ag.config.register_default(
        prompt_template=ag.TextParam("Summarize the following text: {text}")
    )

    @ag.entrypoint
    def generate(text: str) -> str:
        # Fill the template with the input text and send it to the model.
        prompt = ag.config.prompt_template.format(text=text)
        payload = {"inputs": prompt}
        response = requests.post(API_URL, headers=headers, json=payload)
        return response.json()[0]["generated_text"]
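The entrypoint indexes the response as `[0]["generated_text"]`, which fails with an opaque `KeyError` or `TypeError` if the API returns something else, for example while the model is still loading. A more defensive parser might look like the sketch below. It assumes that error responses arrive as a JSON object with an `error` key; `extract_generated_text` is a hypothetical helper, not part of the Agenta SDK or of `requests`.

```python
from typing import Any


def extract_generated_text(body: Any) -> str:
    """Pull the generated text out of a decoded Inference API response."""
    # Assumption: failures are reported as a dict containing an "error" key.
    if isinstance(body, dict) and "error" in body:
        raise RuntimeError(f"Inference API error: {body['error']}")
    # Success is expected to be a non-empty list whose first item is a dict
    # with a "generated_text" field, as the tutorial's script relies on.
    if (
        isinstance(body, list)
        and body
        and isinstance(body[0], dict)
        and "generated_text" in body[0]
    ):
        return body[0]["generated_text"]
    raise ValueError(f"Unexpected response shape: {body!r}")
```

Inside `generate`, the last line could then read `return extract_generated_text(response.json())`, which keeps the happy path unchanged while turning unexpected responses into readable errors.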

Deploy with Agenta

Save the script as app.py, then execute these commands to deploy your application:

    agenta init
    agenta variant serve app.py

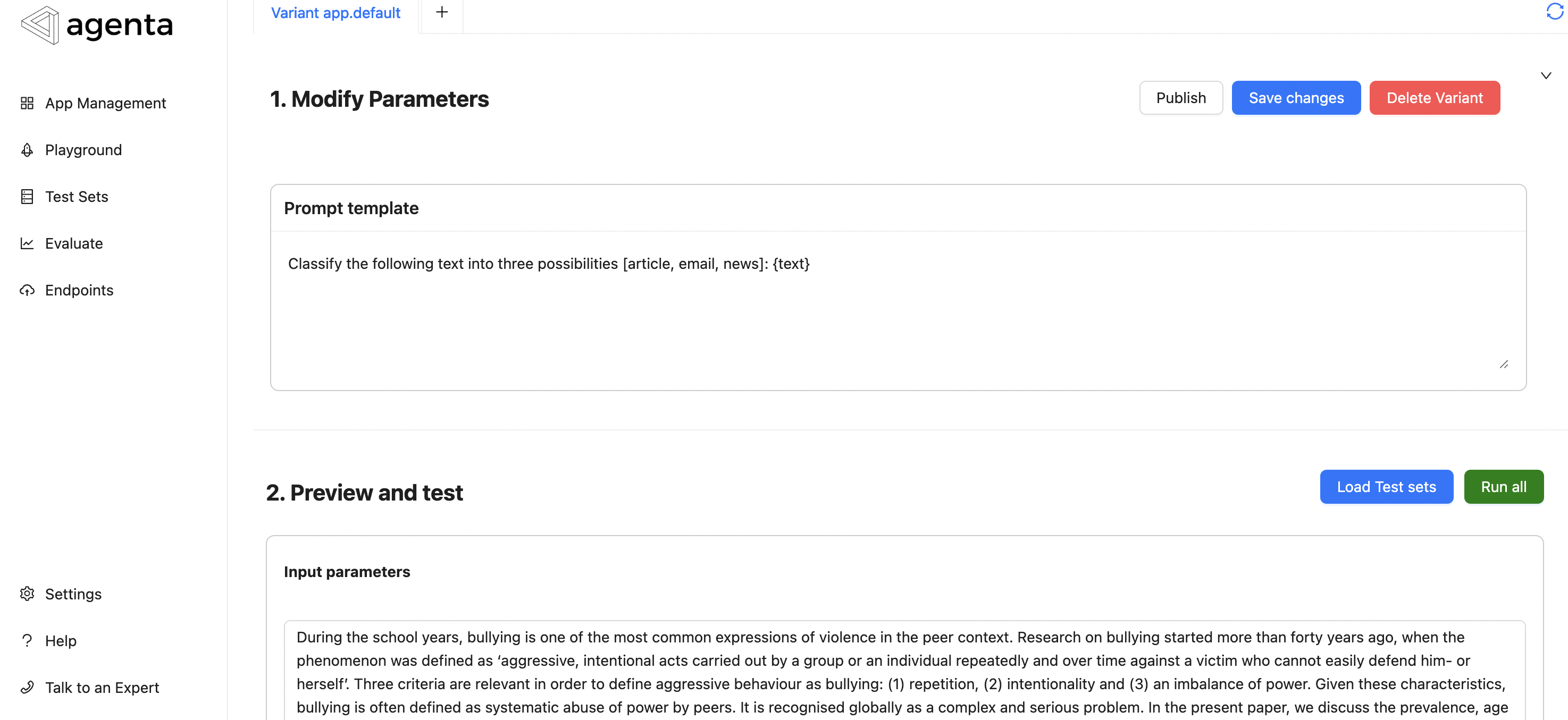

Interact with Your App

Open the playground for your app in Agenta at https://cloud.agenta.ai/apps (or on your local instance if you self-host Agenta).


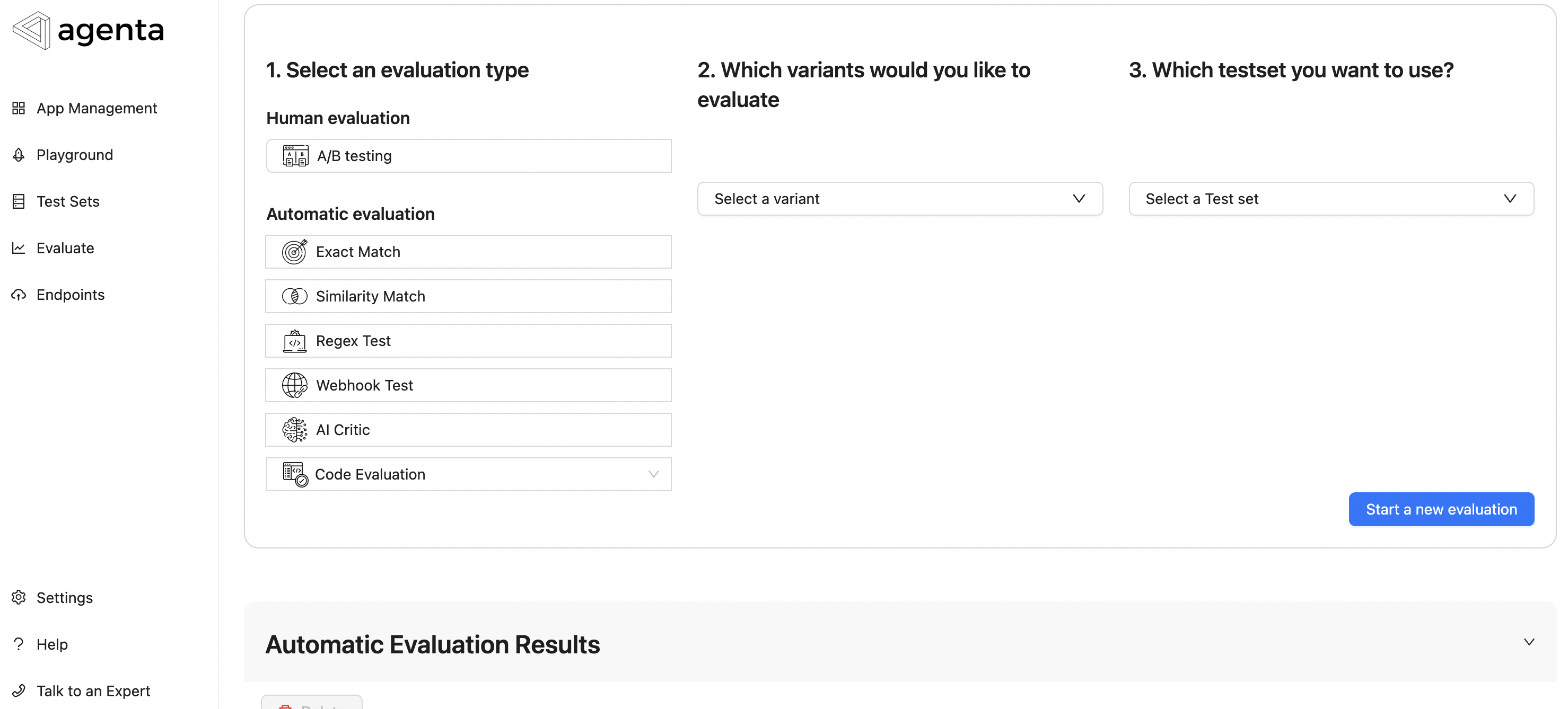

Evaluate Model Performance

Use Agenta's tools to create a test set and evaluate your LLM application.


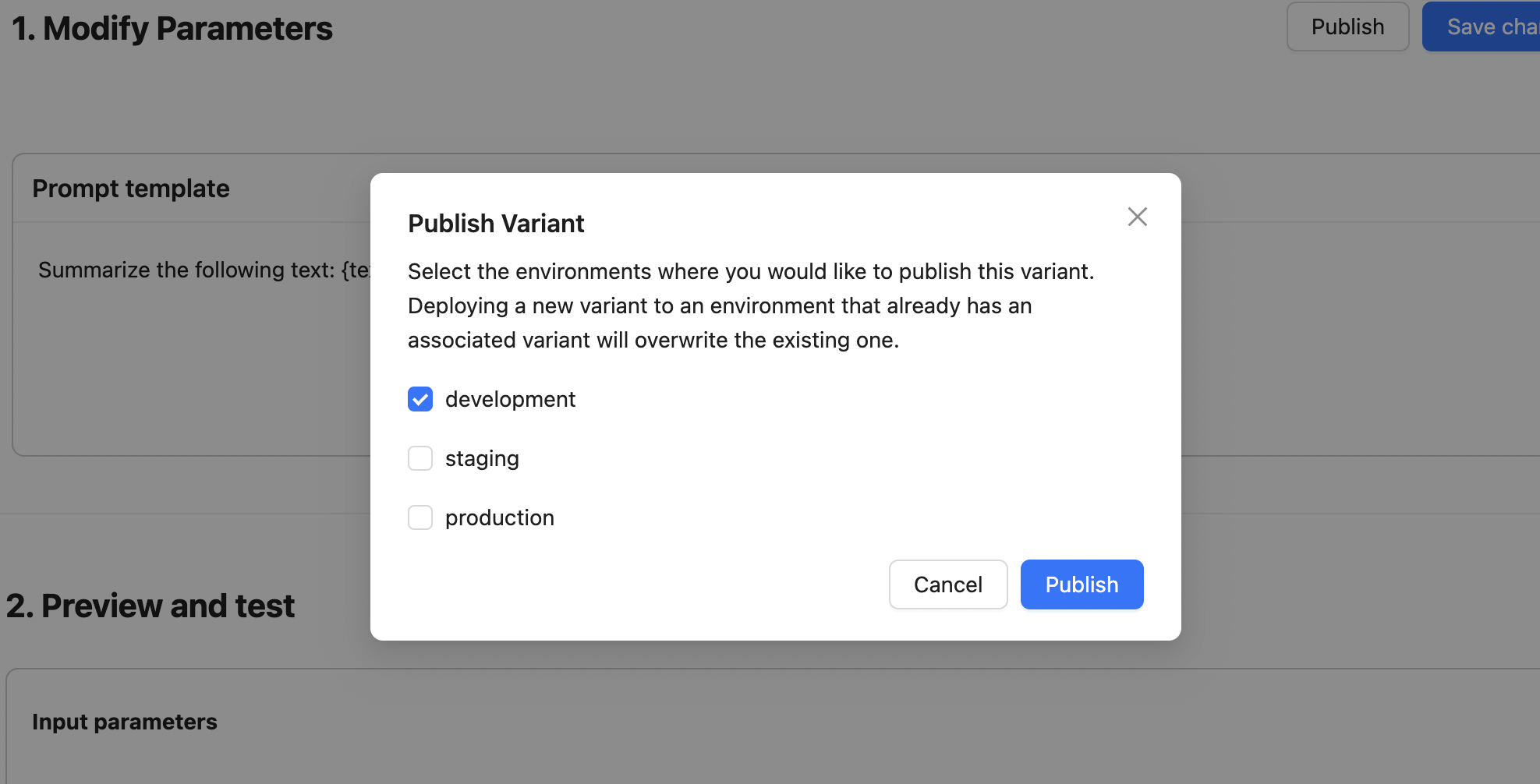
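If you prefer to prepare a test set as a file before evaluating, a small script can generate it as CSV. The sketch below rests on assumptions: the `text` column mirrors the `text` parameter of the `generate` entrypoint, the `correct_answer` column name for the expected output should be checked against your Agenta version, and the sample row is made up purely for illustration.

```python
import csv
import io

# Assumption: one column per app input ("text") plus an expected-output column.
FIELDNAMES = ["text", "correct_answer"]


def build_test_set_csv(rows: list) -> str:
    """Serialize test cases (a list of dicts) to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Illustrative sample data only, not taken from the tutorial.
sample_rows = [
    {
        "text": "Agenta is a platform for building LLM apps. It has a playground.",
        "correct_answer": "Agenta is an LLM app platform with a playground.",
    },
]
csv_text = build_test_set_csv(sample_rows)
```

Writing `csv_text` to a file, for example with `open("test_set.csv", "w", newline="")`, gives a file with one row per test case.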

Publish Your Variant

To deploy, go to the playground, click 'Publish', and choose the deployment environment.


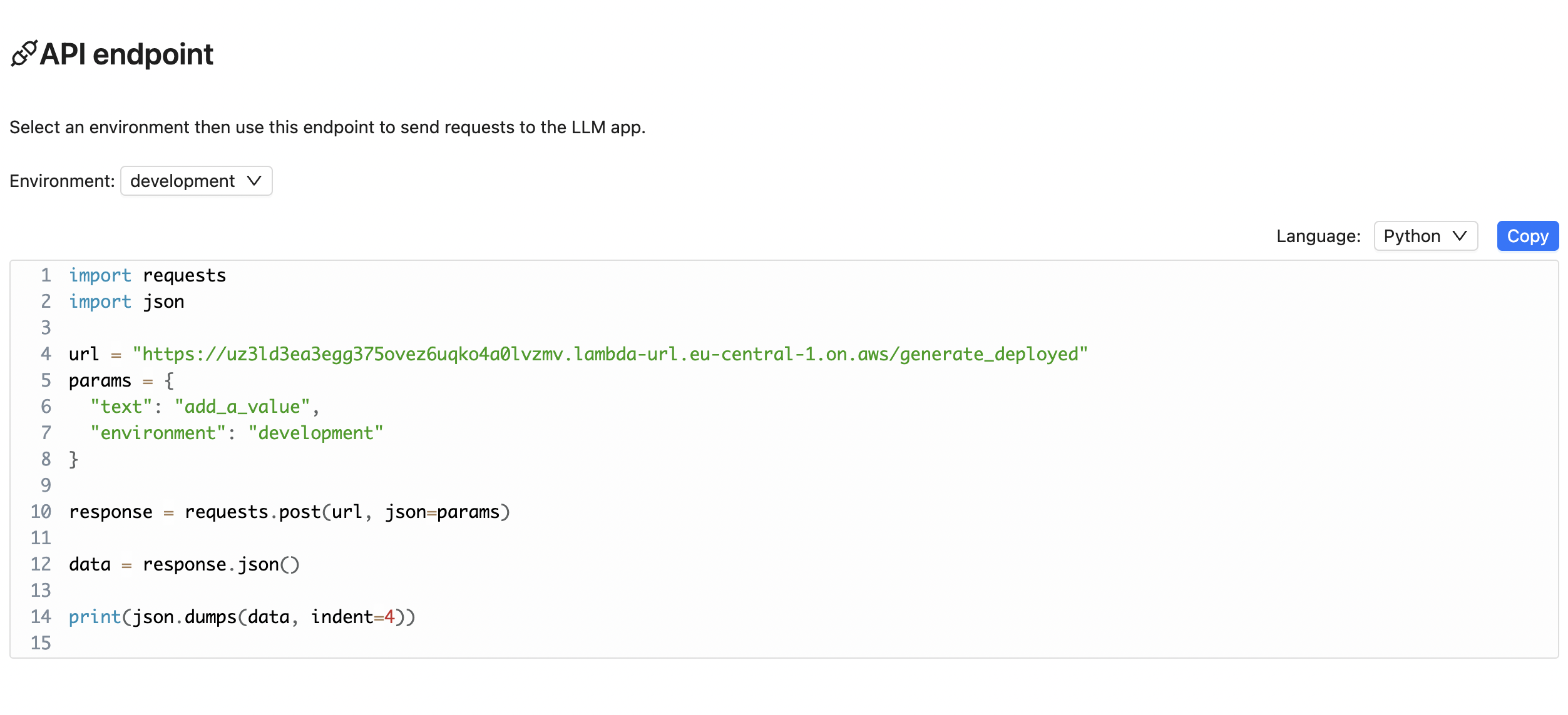

Access the Endpoints

In the 'Endpoints' section, select your environment and use the provided endpoint to interact with your LLM app.

Note that you can compare this model with other LLMs by serving additional variants of the same app. For instance, create a new file named app_cohere.py that uses a Cohere model, then serve it in the same app by running agenta variant serve app_cohere.py.